News

Insup Lee and Insik Shin have been honored with the Influential Paper Award at RTSS 2025 for their paper “Periodic Resource Model for Compositional Real-Time Guarantees.” Insup attended the event to accept the award in person.

The paper, originally developed with Insik during his PhD at Penn, transformed hierarchical real-time scheduling. Its framework remains foundational more than twenty years later and continues to guide researchers and practitioners in designing safe, predictable systems.

The PRECISE Center thanks the RTSS and TCRTS committees for recognizing this impactful work.

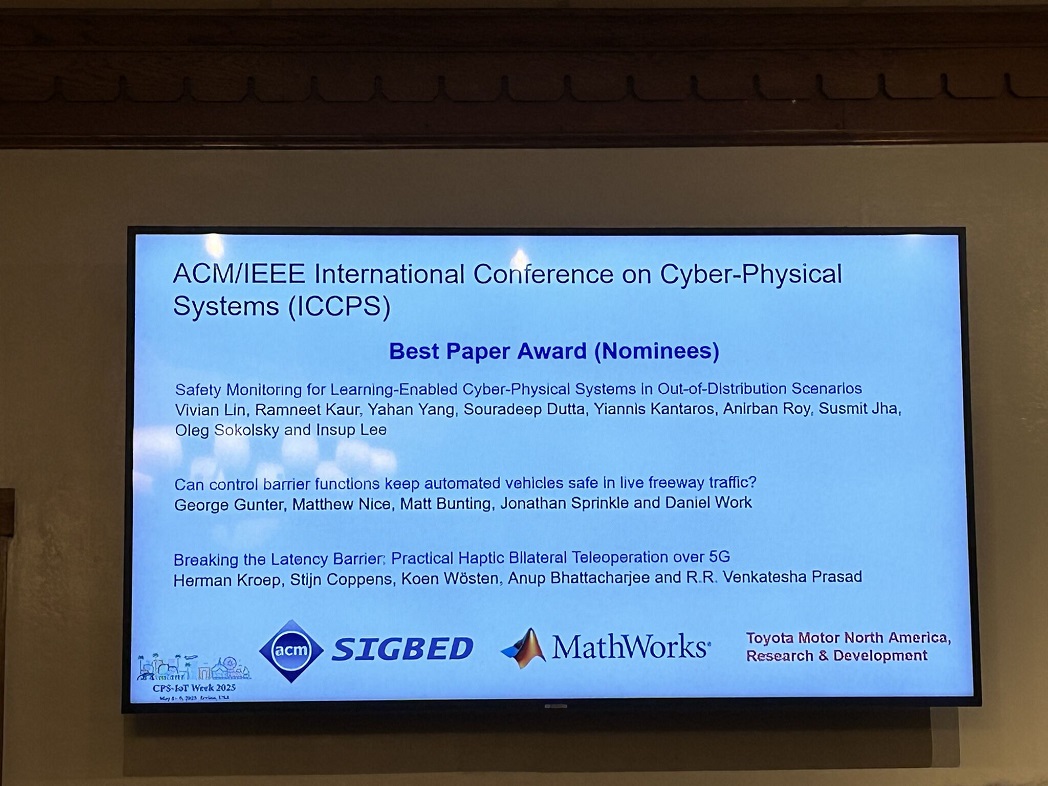

We are pleased to announce that the paper “Safety Monitoring for Learning-Enabled Cyber-Physical Systems in Out-of-Distribution Scenarios” has been nominated for the Best Paper Award at the 16th ACM/IEEE International Conference on Cyber-Physical Systems (ICCPS), held during CPS Week 2025 at the University of California, Irvine.

Authored by Vivian Lin, Ramneet Kaur, Yahan Yang, Souradeep Dutta, Yiannis Kantaros, Susmit Jha, Anirban Roy, Oleg Sokolsky, and Insup Lee, this work addresses a critical challenge in the deployment of autonomous systems: ensuring safety when these systems operate under conditions not seen during training. The paper introduces a novel runtime monitoring framework that provides formal safety guarantees in such out-of-distribution scenarios, an important advancement for the reliable use of learning-enabled components in safety-critical applications.

The full paper is available here.

We congratulate the authors on this well-deserved recognition and their contribution to the advancement of trustworthy cyber-physical systems.

In many machine learning applications—such as robotic learning—there is limited data for a given task or platform. A natural solution is to train a shared model to extract generally useful information across tasks. However, standard deep learning optimization algorithms can fail to find good solutions, even in the simplest settings.

The Challenge:

Multi-task transfer learning is a key desideratum in large-scale and safety-critical applications, yet unforeseen issues in its optimization can compromise performance and safety. Thomas Zhang, our 5th-year PhD student (advised by Nikolai Matni), identifies these fundamental failures and introduces a family of algorithms that provably fix them.

Why This Matters:

These failures had previously flown under the radar because of ubiquitous but unrealistic assumptions in prior theoretical work. By establishing a new perspective for designing and analyzing multi-task learning algorithms, this work takes a crucial step toward making safety-critical applications more reliable and trustworthy.

Beyond Robotics & Autonomous Systems:

Our insights extend beyond self-driving cars and robotics—they apply broadly across deep learning. We identify a bottleneck in neural network optimization that affects many architectures, leading to potential advances in:

• New deep learning optimizers

• Better understanding of normalization layers

• Improved representations for multi-modal vision/language models

Read his full paper here: https://lnkd.in/exB4H5W3

Last Friday, Jean Park, our 3rd-year PhD student, presented her research at AAAI 2025!

Missed her poster session? Check out her work on multimodal AI reasoning here: https://lnkd.in/eciY6peu

Jean introduces the Modality Importance Score (MIS), a pioneering metric that quantifies each modality’s contribution to answering a question. This breakthrough exposes biases in Video Question Answering (VidQA) datasets and drives AI toward true multimodal understanding.

THE PROBLEM

Despite advancements in Video Question Answering (VidQA), many AI models fail to truly integrate multiple modalities. For instance, in a medical setting, if a patient verbally denies drinking while their spouse subtly nods in disagreement, today’s AI might overlook the contradiction. This happens because existing datasets enable models to rely on biases rather than truly reasoning across modalities—posing risks in healthcare, security, and autonomous systems.

THE INNOVATION

MIS is the first quantitative measure of a modality’s importance in answering a question. It:

• Uncovers hidden biases in VidQA datasets and models

• Provides a more practical and scalable alternative to manual annotation

• Guides the development of more balanced, robust multimodal datasets

• Helps researchers build AI that actually integrates multiple signals rather than relying on single-modality shortcuts

As Multimodal Large Language Models (MLLMs) are rapidly deployed in real-world settings, biased training data means biased models. MIS isn’t just an academic contribution—it’s a practical tool that can revolutionize industries where integrating multiple modalities is critical:

• Healthcare – Ensuring AI correctly interprets verbal + visual cues in diagnostics and patient interactions

• Film & Media – Enhancing AI-driven scene understanding and video summarization

• Autonomous Driving – Improving how vehicles synthesize multimodal sensor data for decision-making

This work was advised by Professors Eric Eaton, Insup Lee, and Kevin Johnson, whose guidance was instrumental in shaping Jean's research.

We are excited to announce that our paper has been accepted for publication in Transactions on Machine Learning Research (TMLR)!

What’s it about?

Our work tackles a fundamental challenge in Imprecise Probabilistic Machine Learning: how to empirically derive credal regions (sets of plausible probabilities) without strong assumptions. By leveraging Conformal Prediction, we introduce a new method that:

• Provides provable coverage guarantees

• Reduces prediction set sizes

• Disentangles different types of uncertainty (epistemic & aleatoric)
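For readers new to conformal prediction, here is a minimal sketch of standard split conformal prediction for classification, the general technique this work leverages. It is not the paper's credal-region method. The function names `conformal_threshold` and `prediction_set` are hypothetical, and the nonconformity score (one minus the probability the model gives the true label) is an assumed, commonly used choice. If calibration and test data are exchangeable, sets built this way contain the true label with probability at least 1 - alpha.

```python
import math
from typing import List, Sequence, Set


def conformal_threshold(
    cal_probs: Sequence[Sequence[float]],
    cal_labels: Sequence[int],
    alpha: float,
) -> float:
    """Split conformal threshold computed from a held-out calibration set.

    Assumed nonconformity score: 1 - (model probability of the true label).
    """
    scores: List[float] = sorted(
        1.0 - probs[y] for probs, y in zip(cal_probs, cal_labels)
    )
    n = len(scores)
    # Finite-sample-corrected rank: take the ceil((n + 1)(1 - alpha))-th smallest score.
    k = math.ceil((n + 1) * (1 - alpha))
    if k > n:
        # Too few calibration points for this alpha: every label must be included.
        return math.inf
    return scores[k - 1]


def prediction_set(probs: Sequence[float], threshold: float) -> Set[int]:
    """Return every label whose nonconformity score does not exceed the threshold."""
    return {label for label, p in enumerate(probs) if 1.0 - p <= threshold}


if __name__ == "__main__":
    # Toy calibration data (hypothetical): predicted class probabilities and true labels.
    cal_probs = [[0.9, 0.1], [0.8, 0.2], [0.3, 0.7], [0.6, 0.4]]
    cal_labels = [0, 0, 1, 1]
    q = conformal_threshold(cal_probs, cal_labels, alpha=0.25)  # 4th-smallest score, about 0.6
    print(prediction_set([0.95, 0.05], q))  # {0}
    print(prediction_set([0.5, 0.5], q))    # {0, 1}
```

Confident predictions yield small sets and uncertain ones yield larger sets, which is why prediction set size is the usual measure of how informative a conformal method is.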

Breaking new ground:

Previous approaches relied on the consonance assumption to relate conformal prediction and imprecise probabilities. Our method removes this constraint and introduces:

• A practical approach to handling ambiguous ground truth, allowing predictions even when labels are uncertain

• A calibration property that ensures the true data-generating process is included with high probability

This breakthrough enables reliable uncertainty estimation in real-world applications!

Why does it matter?

This work is a step forward for trustworthy AI. Understanding when and how to trust model predictions is critical for deploying AI in:

• Healthcare – Ensuring diagnostic models acknowledge uncertainty

• Autonomous Systems – Improving decision-making in uncertain environments

• Finance & Risk Assessment – More reliable probabilistic forecasting

A true collaborative effort!

This paper is the result of a fantastic collaboration between:

• Michele Caprio (Former Postdoc at the PRECISE Center/Penn, now at The University of Manchester)

• David Stutz (Google DeepMind)

• Shuo Li (5th-year PhD Student at the PRECISE Center/Penn Engineering)

• Arnaud Doucet (Google DeepMind)

What sets our work apart:

There’s existing research on imprecise probabilities and uncertainty quantification with ambiguous ground truth. Here’s why our approach stands out:

• Smaller Prediction Sets – More precise info for decision-making

• Fewer Assumptions – True label coverage without assuming a particular data distribution

• Uncertainty Decomposition – Separates aleatoric & epistemic uncertainty—something previous methods couldn’t achieve

We’re incredibly proud of the innovative ideas that emerged from this partnership between the PRECISE Center and Google DeepMind.

Check out our paper here: https://lnkd.in/eFDD-PcB

How do we teach AI to think on its feet? Imagine robots and agents that can not only adapt instantly to new environments but also take on entirely new tasks without needing any retraining.

The Problem:

Most general AI agents in robotics and gaming stumble when faced with new tasks or unfamiliar settings. Even after large policies are pretrained on massive datasets, they remain inflexible.

Our Solution:

Project REGENT shows that retrieval-augmented general AI agents can:

• Adapt to unseen robotics tasks and games—with zero training in these new scenarios.

• Retrieve relevant data on the fly, throw it into context, and perform like pros.

By learning to combine retrieval-augmentation and in-context learning (inspired by LLMs like GPT but applied to robotics), REGENT is:

• More resource-efficient (in parameters and pre-training data).

• Scalable for real-world applications.

• A step closer to building agents that adapt like humans.

Why It Matters:

Think about a robot trained to assist in your kitchen. Can it handle a new kitchen, adapt to a task in the living room, or even work in a factory setting?

Project REGENT is building toward a future where robots (physical agents) and digital assistants can:

• Seamlessly adapt to any environment.

• Learn new tasks in context, in real time.

• Unlock new possibilities in industries like manufacturing, logistics, and home automation.

Project REGENT wouldn’t be possible without the work of Kaustubh Sridhar and Souradeep Dutta, with the mentorship of Dinesh Jayaraman and Insup Lee. Excited to present this at ICLR 2025!

Learn more about our work here: bit.ly/regent-research

Congratulations to our alumna Elizabeth Dinella (CIS PhD, 2023; now faculty at Bryn Mawr College) for receiving the 2025 ACM SIGSOFT Outstanding Dissertation Award!

Her thesis, "Neural Inference of Program Specifications," explores how deep learning can address key challenges in automating program reasoning. Elizabeth completed this work under the guidance of her advisor, Professor Mayur Naik, during her time at Penn Engineering. Below is the full summary of her work:

In an ideal development setting, programmers could leverage automated tools that efficiently predict program behavior, identifying potential bugs, security vulnerabilities, and readability issues. Decades of research in program reasoning have yielded many fruitful techniques grounded in rules and formal logic. However, significant barriers still stand in the way of fully automated, effective program reasoning tools. First, many techniques require a correctness property to check against. Second, many techniques struggle to scale in the presence of constructs that are common in real-world programs.

Elizabeth’s research explores the idea that neural inference of specifications can overcome fundamental roadblocks in automated program reasoning. By applying statistical techniques to a variety of program reasoning tasks (static bug finding, merge conflict resolution, and automated testing), her work demonstrates the promise of deep learning-based specification inference and its implications for classical software engineering problems.

Congratulations again to Elizabeth on this well-deserved recognition!

Congratulations to PRECISE Center postdoc Michele Caprio, who has been elected a member of the London Mathematical Society, a preeminent UK organization for advancing and promoting mathematical knowledge! This is just one of Michele's impressive recent accomplishments: he was also selected as the recipient of the 2024 New Researcher Travel Award from the Institute of Mathematical Statistics (IMS) for his work on finding tight bounds between the Kullback-Leibler divergence and the total variation distance between distributions of different dimensions.

Kudos to Michele for the well-deserved accolades!

PRECISE faculty member George Pappas has been elected to the National Academy of Engineering (NAE) for his work on the analysis, synthesis and control of safety-critical cyber-physical systems, including self-driving cars and autonomous robots.

Election to the NAE is one of the highest professional honors for an engineer. Congratulations, Professor Pappas, on being recognized for your long career of groundbreaking work!

Nikolai Matni received the 2024 Young Investigator Program (YIP) Award from the Air Force Office of Scientific Research (AFOSR). He is one of the 48 scientists and engineers from 36 institutions and businesses in 20 states chosen this year. The title of Nikolai's proposal is "Towards a Statistical Learning Theory of Nonlinear Control", funded under the topic area of Dynamical Systems and Control. This highly competitive award recognizes Dr. Matni's exceptional ability and promise for conducting basic research of military relevance.

Congratulations!